Container Orchestration has become increasingly popular in recent years due to the growing adoption of containerized microservices. By automating how containers are deployed, scaled, connected, and monitored, container orchestration tools are crucial in ensuring that our services remain available and responsive.

However, before we delve into the intricacies of container orchestration, it’s important to understand the fundamentals of containers themselves. Check out our blog to learn about containers.

In this article, we will explore Container Orchestration and the problems it solves. We will also discuss Kubernetes, the most popular Container Orchestration system.

Let’s dive in!

Why do we need Container Orchestration?

Containers have become a fundamental building block for modern software development, providing an efficient and portable way to package applications along with their dependencies. Microservices architecture has leveraged the power of containers to develop task-specific services that can be easily built, deployed, and updated. With continuous integration and delivery (CI/CD) pipelines, containers enable rapid and efficient software development.

However, as services grow in size and complexity, managing the sheer number of containers can become overwhelming. A system with tens or hundreds of services may run hundreds or even thousands of containers, each of which has to be placed on a host, restarted when it fails, scaled with demand, and updated without interrupting users. This is where Container Orchestration comes in, automating the deployment, management, scaling, networking, and availability of containers.

What is Container Orchestration?

Container Orchestration is the process of automating the deployment, management, scaling, and networking of containerized applications. It involves managing large numbers of containers, along with their dependencies and networking, in a way that ensures high availability, reliability, and performance.

Some of the key tasks performed by Container Orchestration include:

Deployment: Container Orchestration platforms automate the deployment of containerized applications across multiple nodes, making it easy to manage and scale the application.

Scaling: Container Orchestration platforms can automatically scale container instances based on application demand, which helps to ensure that the application can handle sudden spikes in traffic.

Load balancing: Container Orchestration platforms provide load-balancing services that distribute traffic across multiple containers, so that no single instance is overwhelmed and the application stays available and responsive.

Service discovery: Container Orchestration platforms provide tools for managing and discovering services running in containers, making it easier to manage complex distributed applications.

Resource allocation: Container Orchestration platforms allocate resources to containers based on the needs of the application, ensuring that resources are used efficiently.

Health monitoring: Container Orchestration platforms monitor the health of containers and services, automatically restarting failed containers or services.

Rollbacks and updates: Container Orchestration platforms support rolling updates, which replace the instances of an application gradually so that a new version can be released with little or no downtime, and they make it easy to roll back to a previous version if something goes wrong.
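To make the load-balancing and health-monitoring tasks above more concrete, here is a minimal Python sketch of a balancer that rotates requests across container instances and skips any instance marked unhealthy. Round-robin is only one possible policy, and the class and instance names (`RoundRobinBalancer`, `"web-1"` and so on) are hypothetical; real platforms use their own balancing policies and health checks.

```python
class RoundRobinBalancer:
    """Hypothetical round-robin balancer that skips unhealthy instances."""

    def __init__(self, instances):
        self.instances = list(instances)    # fixed rotation order
        self.healthy = set(self.instances)  # every instance starts healthy
        self._next = 0                      # index of the next instance to try

    def mark_unhealthy(self, instance):
        # A failed health check takes the instance out of rotation.
        self.healthy.discard(instance)

    def mark_healthy(self, instance):
        # A passing health check (e.g. after a restart) puts it back.
        if instance in self.instances:
            self.healthy.add(instance)

    def pick(self):
        # Try each instance at most once, starting where the last call stopped.
        for _ in range(len(self.instances)):
            instance = self.instances[self._next]
            self._next = (self._next + 1) % len(self.instances)
            if instance in self.healthy:
                return instance
        raise RuntimeError("no healthy instances available")


lb = RoundRobinBalancer(["web-1", "web-2", "web-3"])
print([lb.pick() for _ in range(4)])  # ['web-1', 'web-2', 'web-3', 'web-1']
lb.mark_unhealthy("web-2")
print([lb.pick() for _ in range(3)])  # ['web-3', 'web-1', 'web-3']
```

Traffic simply flows around the unhealthy instance until a later health check marks it healthy again.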

Overall, Container Orchestration provides a comprehensive set of tools and services for managing containerized applications at scale, making it easier to develop and deploy applications in a cloud-native environment.

Here’s how Container Orchestration works in general:

The container image is built and pushed to a container registry.

The Container Orchestration platform pulls the container image from the registry and deploys it to a node in the cluster.

The platform automatically scales the number of running container instances based on application demand, using mechanisms such as the Horizontal Pod Autoscaler (HPA) in Kubernetes to add or remove instances.

The platform provides a load balancer that distributes traffic across container instances.

The platform provides service discovery tools that allow the application to discover other services running within the cluster.

The platform monitors the health of the containers and restarts failed containers automatically.

The platform provides tools to perform rolling updates, allowing for updates to the application without downtime.

The platform can manage resources across the cluster, ensuring that each container instance has the resources it needs to run the application.
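In Kubernetes, the Horizontal Pod Autoscaler mentioned in the steps above derives a replica count from the ratio between an observed metric and its target. The Kubernetes documentation gives the core rule as `desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)]`, with no scaling when that ratio is within a tolerance (0.1 by default). The Python sketch below applies only that rule plus min/max bounds; the function name and default bounds are assumptions, and the real controller also deals with missing metrics, pods that are not yet ready, and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count suggested by the HPA scaling rule (simplified sketch).

    current_metric and target_metric could be, for example, the average CPU
    utilization across pods and the target utilization, both in percent.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current count to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured minimum and maximum replica counts.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90, 60))   # 6  (scale out: 4 * 1.5)
print(desired_replicas(4, 20, 50))   # 2  (scale in: ceil(4 * 0.4))
print(desired_replicas(4, 52, 50))   # 4  (within the 10% tolerance)
print(desired_replicas(8, 200, 50))  # 10 (capped at max_replicas)
```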

Kubernetes or K8s

Kubernetes is an open-source Container Orchestration platform originally developed by Google. It provides a powerful and flexible platform for managing containerized applications across multiple hosts and clouds. Kubernetes provides a comprehensive set of tools and services for automating the deployment, scaling, and management of containerized applications.

Kubernetes provides the following key features:

Container orchestration: Kubernetes automates the deployment and management of containers, providing a scalable and reliable platform for running containerized applications.

Service discovery and load balancing: Kubernetes can expose a set of pods under a stable DNS name or IP address and distribute traffic across them, making it easier to manage complex distributed applications.

Self-healing: Kubernetes automatically restarts failed containers or reschedules them to other nodes in the cluster, ensuring high availability and reliability.

Horizontal scaling: Kubernetes can automatically scale container instances based on application demand, allowing the application to handle sudden spikes in traffic.

Rolling updates and rollbacks: Kubernetes can roll out a new version of an application gradually, without downtime, and roll back to a previous version if needed.

Container storage orchestration: Kubernetes can automatically mount storage, such as local disks or volumes from a cloud provider, so that application data can persist beyond the lifetime of an individual container.

Integration with other tools: Kubernetes integrates with a wide range of other tools and services, including monitoring and logging tools, continuous integration and delivery (CI/CD) pipelines, and cloud providers.
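The rolling updates listed above can be illustrated with a small simulation. Kubernetes Deployments bound a rollout with two settings: `maxSurge` (how many pods may exist above the desired count) and `maxUnavailable` (how many may be missing below it). The Python sketch below assumes that new pods become ready instantly and takes both limits as absolute pod counts rather than percentages; the function name is hypothetical, and the real Deployment controller is considerably more involved.

```python
def rolling_update(replicas, max_surge, max_unavailable):
    """Simulate replacing `replicas` old pods with new ones.

    Returns the (old, new) pod counts after each step. Assumes every new
    pod becomes ready immediately, so all existing pods count as available.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: add new pods without exceeding replicas + max_surge in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Scale down: remove old pods while keeping at least
        # replicas - max_unavailable pods available.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        history.append((old, new))
    return history


print(rolling_update(4, max_surge=1, max_unavailable=0))
# [(4, 0), (3, 1), (2, 2), (1, 3), (0, 4)]
print(rolling_update(4, max_surge=0, max_unavailable=1))
# [(4, 0), (3, 0), (2, 1), (1, 2), (0, 3), (0, 4)]
```

With `max_surge=1, max_unavailable=0` the rollout never drops below four running pods but briefly runs five; with `max_surge=0, max_unavailable=1` it never exceeds four but may run only three.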

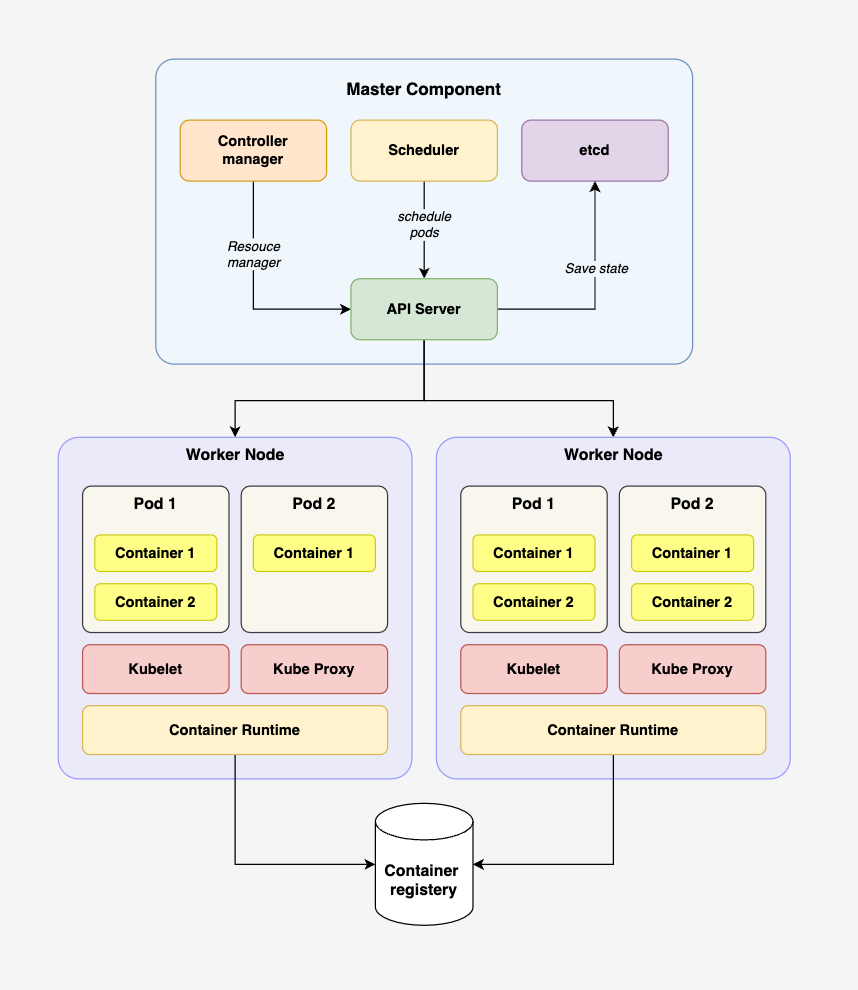

Components in Kubernetes

Master (control plane) components

The master components are responsible for managing the Kubernetes cluster. They include the following components:

API server: The API server is the front end of the Kubernetes control plane. It exposes the Kubernetes API, through which users, tools such as kubectl, and the other cluster components read and change the state of the cluster.

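etcd: etcd is a consistent, distributed key-value store that serves as the backing store for all cluster data, including configuration and the state of every Kubernetes object. The API server is the component that reads from and writes to it.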

Controller manager: The controller manager runs the built-in controllers, for example the ones that manage Deployments, ReplicaSets, and Nodes. Each controller watches the cluster state through the API server and takes action to move the current state toward the desired state.

Scheduler: The scheduler assigns newly created pods to nodes in the cluster based on resource requirements, node capacity, and other constraints.
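The scheduler's decision can be pictured as two phases: filter out the nodes that cannot run the pod, then score the remaining ones. The Python sketch below follows that filter-then-score shape, which is how kube-scheduler is organized, but the scoring rule (prefer the node left with the most free CPU and memory, similar in spirit to a least-allocated strategy), the `Node` class, and all node names and numbers are illustrative assumptions; the real scheduler applies many more plugins and constraints.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int          # allocatable CPU in millicores
    mem_capacity_mib: int        # allocatable memory in MiB
    cpu_requested_m: int = 0     # CPU already requested by pods on this node
    mem_requested_mib: int = 0   # memory already requested by pods on this node


def schedule(cpu_m: int, mem_mib: int, nodes: List[Node]) -> Optional[str]:
    """Pick a node for a pod requesting cpu_m millicores and mem_mib MiB."""
    # Filter: drop nodes that cannot fit the pod's resource requests.
    feasible = [
        n for n in nodes
        if n.cpu_capacity_m - n.cpu_requested_m >= cpu_m
        and n.mem_capacity_mib - n.mem_requested_mib >= mem_mib
    ]
    if not feasible:
        return None  # no node fits: the pod would stay Pending

    # Score: average fraction of CPU and memory still free after placement.
    def score(n: Node) -> float:
        cpu_free = (n.cpu_capacity_m - n.cpu_requested_m - cpu_m) / n.cpu_capacity_m
        mem_free = (n.mem_capacity_mib - n.mem_requested_mib - mem_mib) / n.mem_capacity_mib
        return (cpu_free + mem_free) / 2

    # Highest score wins; ties go to the alphabetically first node name.
    best = min(feasible, key=lambda n: (-score(n), n.name))
    # Bind: record the pod's requests against the chosen node.
    best.cpu_requested_m += cpu_m
    best.mem_requested_mib += mem_mib
    return best.name


nodes = [
    Node("node-a", 2000, 4096, cpu_requested_m=1500, mem_requested_mib=1024),
    Node("node-b", 4000, 8192, cpu_requested_m=1000, mem_requested_mib=2048),
    Node("node-c", 1000, 2048),
]
print(schedule(600, 1024, nodes))  # node-b (node-a lacks CPU; node-b scores higher than node-c)
print(schedule(5000, 512, nodes))  # None (no node has 5000m of CPU free)
```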

Node components

The node components are responsible for running and managing containers. They include the following components:

Kubelet: The kubelet is an agent that runs on every node and makes sure that the containers described in the pods assigned to that node are running and healthy.

kube-proxy: kube-proxy runs on each node and maintains the network rules that route traffic addressed to a Service to one of the pods behind it.

Container runtime: The container runtime is responsible for running containers on a node.
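Restarting failed containers is part of the kubelet's work on each node. According to the Kubernetes documentation, the kubelet restarts a repeatedly failing container with an exponential back-off delay (10s, 20s, 40s, and so on) capped at five minutes. The Python sketch below reproduces only that delay schedule; the function names and the simplified exit-code handling (a zero exit code counts as a clean finish with no further restart, roughly like `restartPolicy: OnFailure`) are assumptions for illustration.

```python
def backoff_delay(restart_count: int, base_s: int = 10, cap_s: int = 300) -> int:
    """Seconds to wait before the next restart, given `restart_count` earlier restarts."""
    return min(base_s * 2 ** restart_count, cap_s)


def restart_delays(exit_codes):
    """Given the successive exit codes of one container, return the delay
    applied before each restart. A zero exit code is treated as a clean
    finish, so no further restarts happen (a simplification of OnFailure)."""
    delays = []
    for restart_count, code in enumerate(exit_codes):
        if code == 0:
            break
        delays.append(backoff_delay(restart_count))
    return delays


print([backoff_delay(n) for n in range(7)])  # [10, 20, 40, 80, 160, 300, 300]
print(restart_delays([1, 1, 137, 0]))        # [10, 20, 40]
```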

Add-on components

Add-on components provide additional functionality to the Kubernetes cluster. They include the following components:

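Ingress controller: The ingress controller routes external HTTP and HTTPS traffic into the cluster, forwarding each request to a Service according to the rules defined in Ingress resources.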

DNS: The cluster DNS add-on (commonly CoreDNS) gives Services and pods DNS names, so applications can find each other by name instead of by IP address.

Dashboard: The dashboard provides a web-based user interface for managing the Kubernetes cluster.

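Container registry: The container registry stores the container images that nodes pull when starting containers. It usually runs outside the cluster, although some distributions offer one as an add-on.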
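The DNS add-on is what lets one container reach another by name. In Kubernetes, a Service receives a DNS name of the form `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is commonly `cluster.local`, and pods get a DNS search path so that short names resolve within their own namespace first. The Python sketch below imitates that lookup with an in-memory table; the `ClusterDNS` class, the Service names, and the IP addresses are hypothetical, and real resolution (including the `ndots` option) is handled by the pod's resolver and the DNS server.

```python
from typing import Dict, Optional


class ClusterDNS:
    """Hypothetical in-memory stand-in for the cluster DNS add-on."""

    def __init__(self, cluster_domain: str = "cluster.local"):
        self.domain = cluster_domain
        self.records: Dict[str, str] = {}  # fully qualified name -> Service IP

    def register(self, service: str, namespace: str, ip: str) -> None:
        self.records[f"{service}.{namespace}.svc.{self.domain}"] = ip

    def resolve(self, name: str, client_namespace: str) -> Optional[str]:
        # Try the pod's search path first, then the name exactly as written.
        candidates = [
            f"{name}.{client_namespace}.svc.{self.domain}",
            f"{name}.svc.{self.domain}",
            f"{name}.{self.domain}",
            name,
        ]
        for candidate in candidates:
            if candidate in self.records:
                return self.records[candidate]
        return None


dns = ClusterDNS()
dns.register("db", "prod", "10.0.0.10")
dns.register("db", "staging", "10.0.0.20")
print(dns.resolve("db", "prod"))                           # 10.0.0.10 (same namespace)
print(dns.resolve("db.staging", "prod"))                   # 10.0.0.20 (other namespace)
print(dns.resolve("db.prod.svc.cluster.local", "staging")) # 10.0.0.10 (fully qualified)
print(dns.resolve("cache", "prod"))                        # None
```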

Overall, these components work together to provide a powerful and flexible platform for managing containerized applications in a scalable, efficient, and reliable way.

Some of the key terminologies in Kubernetes are:

Pod: A pod is the smallest and simplest deployable unit in the Kubernetes object model. It represents a single instance of a running process in a cluster and can contain one or more containers.

Container: A container is a lightweight, portable, and self-sufficient runtime environment for running applications. In Kubernetes, a container is typically deployed as part of a pod.

Node: A node is a physical or virtual machine in a Kubernetes cluster that runs one or more pods.

Cluster: A cluster is a group of nodes that work together to run containerized applications.

Deployment: A deployment is a Kubernetes object that manages a set of identical pods through ReplicaSets. It provides declarative updates for rolling out new versions of an application.

Service: A service is a Kubernetes object that provides a stable IP address and DNS name for a set of pods. It enables load balancing and service discovery for applications running in a cluster.

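ReplicaSet: A ReplicaSet is a Kubernetes object that ensures a specified number of identical pod replicas are running at any given time. Deployments create and manage ReplicaSets, so you rarely need to create them directly.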

Namespace: A namespace divides a single Kubernetes cluster into multiple virtual clusters, so that object names, access policies, and resource quotas can be scoped to a team or project.

Volume: A volume is a directory, possibly backed by external storage, that is accessible to the containers in a pod and whose data survives individual container restarts.

ConfigMap: A ConfigMap is a Kubernetes object that provides a way to store configuration data as key-value pairs.

Secret: A Secret is a Kubernetes object that provides a way to store sensitive information, such as passwords or API keys.
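Several of these terms fit together through labels: a ReplicaSet and a Service each select pods by matching labels, and the ReplicaSet's controller keeps comparing the number of matching pods with the desired count. The Python sketch below models that reconciliation and a Service's choice of ready endpoints; the `Pod` class, the function names, and the rule of deleting not-ready pods first are simplifying assumptions, not the actual controller code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Pod:
    name: str
    labels: Dict[str, str] = field(default_factory=dict)
    ready: bool = True


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    # Equality-based selector: every key/value pair in the selector must be present.
    return all(labels.get(key) == value for key, value in selector.items())


def reconcile(desired: int, selector: Dict[str, str], pods: List[Pod]) -> Tuple[int, List[str]]:
    """Compare desired vs. actual replicas; return (pods to create, pod names to delete)."""
    owned = [p for p in pods if matches(selector, p.labels)]
    diff = desired - len(owned)
    if diff >= 0:
        return diff, []
    # Too many replicas: delete the surplus, not-ready pods first.
    surplus = sorted(owned, key=lambda p: (p.ready, p.name))[:-diff]
    return 0, [p.name for p in surplus]


def service_endpoints(selector: Dict[str, str], pods: List[Pod]) -> List[str]:
    # A Service sends traffic only to matching pods that are ready.
    return sorted(p.name for p in pods if p.ready and matches(selector, p.labels))


pods = [
    Pod("web-1", {"app": "web", "tier": "frontend"}),
    Pod("web-2", {"app": "web"}, ready=False),
    Pod("db-1", {"app": "db"}),
]
selector = {"app": "web"}
print(reconcile(3, selector, pods))       # (1, []): one more web pod is needed
print(reconcile(1, selector, pods))       # (0, ['web-2']): the not-ready pod goes first
print(service_endpoints(selector, pods))  # ['web-1']
```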

Overall, Kubernetes provides a powerful and flexible platform for managing containerized applications, allowing developers to focus on building and improving their applications, while Kubernetes takes care of the underlying infrastructure management.

That’s all about “Container Orchestration and Kubernetes”. Thanks for reading!
